Python remains the go-to programming language for artificial intelligence, thanks to its simplicity and a powerful ecosystem of libraries. These Python libraries for AI researchers streamline everything from data manipulation and numerical computing to model training and deployment. Whether you’re rapidly prototyping or pushing models into production, the right tools can significantly boost efficiency and performance. In this guide, we highlight the top 10 Python libraries every AI practitioner should know in 2025—essential resources for accelerating your AI workflow and staying ahead in the field.

Comparison Table: Top 10 Python Libraries for AI Researchers

| Library | Primary Use | Best For | Notable Feature |
|---|---|---|---|
| NumPy | Numerical computing | Array and matrix operations | Vectorized performance |
| Pandas | Data manipulation | Preprocessing structured data | Intuitive DataFrames |
| Matplotlib | Data visualization | Static plots | Customizable plots |
| TensorFlow | Deep learning framework | Production-level deep learning | TensorBoard, model serving |
| PyTorch | Deep learning framework | Research & prototyping | Dynamic computation graph |
| Scikit-learn | Classical ML models | Regression, classification | Easy-to-use APIs |
| Requests | HTTP requests | Accessing web APIs | Simple syntax for API calls |
| Keras | High-level NN API | Beginners in DL, fast prototyping | Runs on TensorFlow, JAX, or PyTorch |
| Seaborn | Statistical visualization | Heatmaps, pair plots | Built on Matplotlib |
| OpenCV | Computer vision | Image and video processing | Real-time performance |

1. NumPy

The Backbone of Numerical Computing

Use Case:

Efficient manipulation of multi-dimensional arrays in AI and ML workflows.

Code Example:

```python
import numpy as np

a = np.array([1, 2, 3])
b = np.array([4, 5, 6])

print(a + b)  # Output: [5 7 9]
```


NumPy provides fast, vectorized operations on arrays and matrices, along with a wide range of mathematical and statistical functions. It is widely used to preprocess data for AI models and is essential for simulations and scientific computing workflows.
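To make the vectorization point concrete, here is a minimal sketch that standardizes each column of a small, made-up feature matrix (zero mean, unit variance) using NumPy's statistical functions and broadcasting, with no explicit Python loops. The matrix values are illustrative only.

```python
import numpy as np

# Illustrative feature matrix: 4 samples (rows) x 3 features (columns)
X = np.array([
    [1.0, 200.0, 0.5],
    [2.0, 180.0, 0.7],
    [3.0, 220.0, 0.2],
    [4.0, 210.0, 0.9],
])

# Per-column statistics; axis=0 reduces over the rows
mean = X.mean(axis=0)
std = X.std(axis=0)

# Broadcasting: the (4, 3) array is combined with the (3,) arrays row by row
X_scaled = (X - mean) / std

print(X_scaled.mean(axis=0).round(6))
print(X_scaled.std(axis=0).round(6))
```

The same expression works unchanged for a matrix with millions of rows, which is where vectorized code pays off compared with a Python loop.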

2. Pandas

Data Wrangling Made Easy

Use Case:

Cleaning, transforming, and analyzing tabular datasets.

Code Example:

```python
import pandas as pd

df = pd.read_csv("data.csv")

print(df.head())
```


Pandas is a powerful Python library used for data manipulation and analysis. It offers two main data structures: Series (1D) and DataFrame (2D) for handling labeled and structured data efficiently. With Pandas, users can clean, transform, and analyze data with ease. It’s widely used in data science and machine learning workflows.

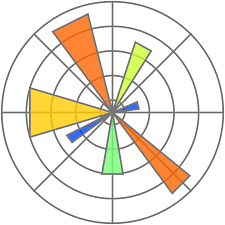
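The sketch below walks through the clean, transform, and analyze steps on a small in-memory DataFrame instead of data.csv, so it runs without any file. The experiment log, its column names, and its values are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical experiment log; np.nan marks a missing accuracy value
df = pd.DataFrame({
    "model": ["cnn", "cnn", "rnn", "rnn", "mlp"],
    "epochs": [10, 20, 10, 20, 10],
    "accuracy": [0.81, 0.86, 0.74, np.nan, 0.69],
})

# A single column is a Series (1D); the whole table is a DataFrame (2D)
print(type(df["accuracy"]).__name__, type(df).__name__)

# Clean: fill each missing accuracy with that model's mean accuracy
group_mean = df.groupby("model")["accuracy"].transform("mean")
df["accuracy"] = df["accuracy"].fillna(group_mean)

# Transform: add a derived column
df["error_rate"] = 1 - df["accuracy"]

# Analyze: best accuracy per model, highest first
summary = df.groupby("model")["accuracy"].max().sort_values(ascending=False)
print(summary)
```

Using `transform("mean")` returns a Series aligned with the original rows, which is what lets `fillna` fill each gap with its own group's value.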

3. Matplotlib

Visualize the Patterns

Use Case:

Plotting graphs for model performance and data insights.

Code Example:

```python
import matplotlib.pyplot as plt

plt.plot([1, 2, 3], [4, 5, 6])
plt.show()
```

Matplotlib is Python's go-to library for creating static, animated, and interactive visualizations. It is commonly used to plot model performance and data trends, is highly customizable for publication-quality graphs, and works well with NumPy and Pandas.
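Since plotting model performance is a common use, here is a hedged sketch of a typical training-curve figure. The loss values are synthetic, and the figure is written to a PNG file (the name loss_curve.png is an arbitrary choice) through the non-interactive Agg backend, so it also works on headless machines.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend: render to files, no window
import matplotlib.pyplot as plt
import numpy as np

# Synthetic loss curves, for illustration only
epochs = np.arange(1, 21)
train_loss = 1.0 / epochs
val_loss = 1.0 / epochs + 0.05

fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(epochs, train_loss, label="train")
ax.plot(epochs, val_loss, label="validation", linestyle="--")
ax.set_xlabel("Epoch")
ax.set_ylabel("Loss")
ax.set_title("Training vs. validation loss")
ax.legend()
ax.grid(True, alpha=0.3)

fig.tight_layout()
fig.savefig("loss_curve.png", dpi=150)
plt.close(fig)  # free the figure's memory once it is saved
```

The object-oriented style (`fig, ax = plt.subplots()`) scales better than calling `plt.plot` directly once a figure has several panels.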

4. TensorFlow

Scalable Deep Learning Framework

Use Case:

Designing, training, and deploying deep learning models in production environments.

Code Example:

```python
import tensorflow as tf

print(tf.reduce_sum(tf.random.normal([1000, 1000])))
```

TensorFlow is an open-source machine learning framework developed by Google. It’s designed for building and deploying ML models at scale, supporting both deep learning and traditional ML workflows.
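As a minimal sketch of TensorFlow's lower-level API (beneath Keras), the code below fits y = 3x + 2 on synthetic data using `tf.GradientTape` and a plain gradient-descent update. The data, learning rate, and step count are illustrative assumptions, not recommended settings.

```python
import tensorflow as tf

# Synthetic data from y = 3x + 2 plus a little noise
tf.random.set_seed(0)
x = tf.random.uniform([256, 1])
y = 3.0 * x + 2.0 + tf.random.normal([256, 1], stddev=0.05)

# Trainable parameters, starting at zero
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.5

for step in range(500):
    with tf.GradientTape() as tape:
        y_pred = w * x + b
        loss = tf.reduce_mean(tf.square(y_pred - y))  # mean squared error
    # Gradients of the loss with respect to each variable
    dw, db = tape.gradient(loss, [w, b])
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print(f"w={w.numpy():.2f}, b={b.numpy():.2f}, loss={loss.numpy():.4f}")
```

In practice you would usually let a Keras optimizer apply the update, but the tape makes explicit what happens during each training step.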

5. PyTorch

Flexible Deep Learning Framework

Use Case:

Creating and training neural networks using dynamic computation graphs.

Code Example:

```python
import torch.nn as nn

model = nn.Sequential(nn.Linear(10, 1))
print(model)
```

PyTorch is a flexible deep learning framework known for its dynamic computation graphs and intuitive syntax. It simplifies model building and debugging, making it ideal for developers and researchers. Widely adopted in both academia and industry, PyTorch excels in rapid experimentation and production deployment.
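To show what a dynamic computation graph means in practice, here is a minimal training-loop sketch: the graph is rebuilt on every forward pass, so ordinary Python control flow and print-debugging work inside the loop. The synthetic regression task and the hyperparameters are assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Synthetic regression data: the target is the sum of the 10 input features
X = torch.randn(512, 10)
y = X.sum(dim=1, keepdim=True)

model = nn.Sequential(nn.Linear(10, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    pred = model(X)              # forward pass builds the graph on the fly
    loss = loss_fn(pred, y)
    loss.backward()              # autograd traverses that graph backward
    optimizer.step()             # update the weights
    if epoch % 50 == 0:          # plain Python control flow inside the loop
        print(f"epoch {epoch}: loss {loss.item():.4f}")

print(f"final loss: {loss.item():.6f}")
```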

6. Scikit-learn

Classical Machine Learning Simplified

Use Case:

Training and evaluating traditional ML models like regression and classification.

Code Example:

```python
from sklearn.linear_model import LinearRegression

model = LinearRegression().fit([[0], [1]], [0, 1])
print(model.coef_)
```

Scikit-learn provides simple, consistent tools for machine learning, model evaluation, and data preprocessing. It is best suited for structured (tabular) datasets and is built on NumPy, SciPy, and Matplotlib.
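A slightly fuller sketch of the preprocessing-plus-evaluation workflow chains `StandardScaler` and `LogisticRegression` in a pipeline and scores it on a held-out split of the Iris dataset, which ships with scikit-learn. The 25% test split and the random seed are arbitrary choices.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Hold out 25% of the samples for evaluation; stratify keeps class balance
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# The scaler is fitted on the training data only, inside the pipeline
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
clf.fit(X_train, y_train)

acc = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {acc:.3f}")
```

Wrapping preprocessing in the pipeline keeps test-set statistics out of the scaler, which avoids a subtle form of data leakage.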

7. Requests

Elegant HTTP for Humans

Use Case:

Making HTTP requests to fetch or post data to web APIs.

Code Example:

```python
import requests

r = requests.get("https://api.github.com", timeout=10)  # always set a timeout
print(r.status_code)
```


Requests simplifies making HTTP requests in Python. It is ideal for consuming APIs and automating web data interactions, and it handles authentication, sessions, and headers with very little code.
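The sketch below illustrates session and header handling. A `Session` reuses connections and attaches shared headers to every request, and `prepare_request` lets you inspect the final URL and headers without sending anything. The token is a placeholder, the helper `make_session` is hypothetical, and the GitHub search endpoint is used only as an example URL.

```python
import requests


def make_session(token: str) -> requests.Session:
    """Hypothetical helper: a session whose headers go out with every request."""
    session = requests.Session()
    session.headers.update({
        "Accept": "application/vnd.github+json",
        "Authorization": f"Bearer {token}",  # placeholder token, not a real one
    })
    return session


session = make_session("YOUR_TOKEN")

# Build (but do not send) a request to inspect what would go over the wire
req = requests.Request(
    "GET",
    "https://api.github.com/search/repositories",
    params={"q": "machine learning", "per_page": 5},
)
prepared = session.prepare_request(req)
print(prepared.url)
print(prepared.headers["Authorization"])

# Sending it requires network access and a valid token:
# response = session.send(prepared, timeout=10)
# response.raise_for_status()
# data = response.json()
```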

8. Keras

High-Level Neural Networks API

Use Case:

Building deep learning models quickly with minimal code.

Code Example:

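```python
from keras import Input
from keras.models import Sequential
from keras.layers import Dense

# Declare the input shape with Input(...) instead of input_shape=,
# which Keras 3 discourages for layers inside a Sequential model
model = Sequential([Input(shape=(10,)), Dense(1)])

model.summary()
```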


Keras provides a high-level interface for creating neural networks. It runs on top of TensorFlow and, since Keras 3, can also use JAX or PyTorch as its backend. It supports fast experimentation, which makes it ideal for beginners and rapid prototyping, and it offers built-in utilities for layers, optimizers, and metrics.
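The built-in optimizers, losses, and metrics come together in `compile()` and `fit()`. This is a minimal sketch assuming Keras 3 (or a recent `tf.keras`) is installed; the synthetic binary-classification data and every hyperparameter are illustrative only.

```python
import numpy as np
import keras
from keras import layers

keras.utils.set_random_seed(0)  # make weight init and shuffling repeatable

# Synthetic data: the label is 1 when the 10 features sum to a positive value
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 10)).astype("float32")
y = (X.sum(axis=1) > 0).astype("float32")

model = keras.Sequential([
    keras.Input(shape=(10,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])

# Built-in optimizer, loss, and metric
model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=0.01),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

# validation_split holds out the last 20% of the samples
history = model.fit(X, y, epochs=20, batch_size=32, validation_split=0.2, verbose=0)
print(f"final validation accuracy: {history.history['val_accuracy'][-1]:.3f}")
```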

9. Seaborn

Statistical Data Visualization

Use Case:

Creating clear, statistical visualizations for data analysis.

Code Example:

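```python
import matplotlib.pyplot as plt
import seaborn as sns

df = sns.load_dataset("iris")  # downloads the example dataset on first use
sns.pairplot(df, hue="species")
plt.show()  # needed outside notebooks to display the figure
```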

Seaborn is a high-level visualization library built on Matplotlib. It offers beautiful default themes and powerful functions for statistical plots. Excellent for exploring relationships and trends in data. Integrates smoothly with Pandas.
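Because Seaborn accepts Pandas DataFrames directly, a correlation heatmap takes only a few lines. This sketch uses a small synthetic DataFrame instead of a downloaded dataset, so it runs offline, and saves the figure to heatmap.png; the column names and the filename are hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # render to a file, no display needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Hypothetical features: "b" depends on "a", "c" is independent noise
rng = np.random.default_rng(0)
a = rng.normal(size=200)
df = pd.DataFrame({
    "a": a,
    "b": 2 * a + rng.normal(scale=0.5, size=200),
    "c": rng.normal(size=200),
})

corr = df.corr()  # pairwise Pearson correlations between columns

ax = sns.heatmap(corr, annot=True, fmt=".2f", cmap="coolwarm", vmin=-1, vmax=1)
ax.set_title("Feature correlations")
plt.tight_layout()
plt.savefig("heatmap.png", dpi=150)
plt.close()
```

Fixing `vmin=-1` and `vmax=1` keeps the color scale comparable across different correlation matrices.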

10. OpenCV

Real-Time Computer Vision

Use Case:

Image and video processing in AI, robotics, and surveillance.

Code Example:

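```python
import cv2

img = cv2.imread("image.jpg")  # returns None if the file cannot be read
if img is None:
    raise FileNotFoundError("Could not read image.jpg")

cv2.imshow("Image", img)  # requires a desktop (GUI) environment
cv2.waitKey(0)            # wait until any key is pressed
cv2.destroyAllWindows()
```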


OpenCV is a powerful library for real-time computer vision. It supports face detection, object tracking, and image transformations, and it is widely used in AI, drones, and AR systems.

Conclusion

Mastering these top 10 Python libraries equips AI researchers and engineers with powerful tools to build, analyze, and scale intelligent systems. Each library serves a distinct purpose, from numerical computation and data processing to visualization, deep learning, and computer vision. Using them effectively can streamline your workflow, improve model performance, and shorten development cycles. As the AI landscape evolves, proficiency in these tools remains a vital part of any data-driven career.